A Repeated "Chicken Game" Experiment

Introducing Mixed Strategy Nash Equilibria, and the First Mover Advantage

Rules as explained to players:

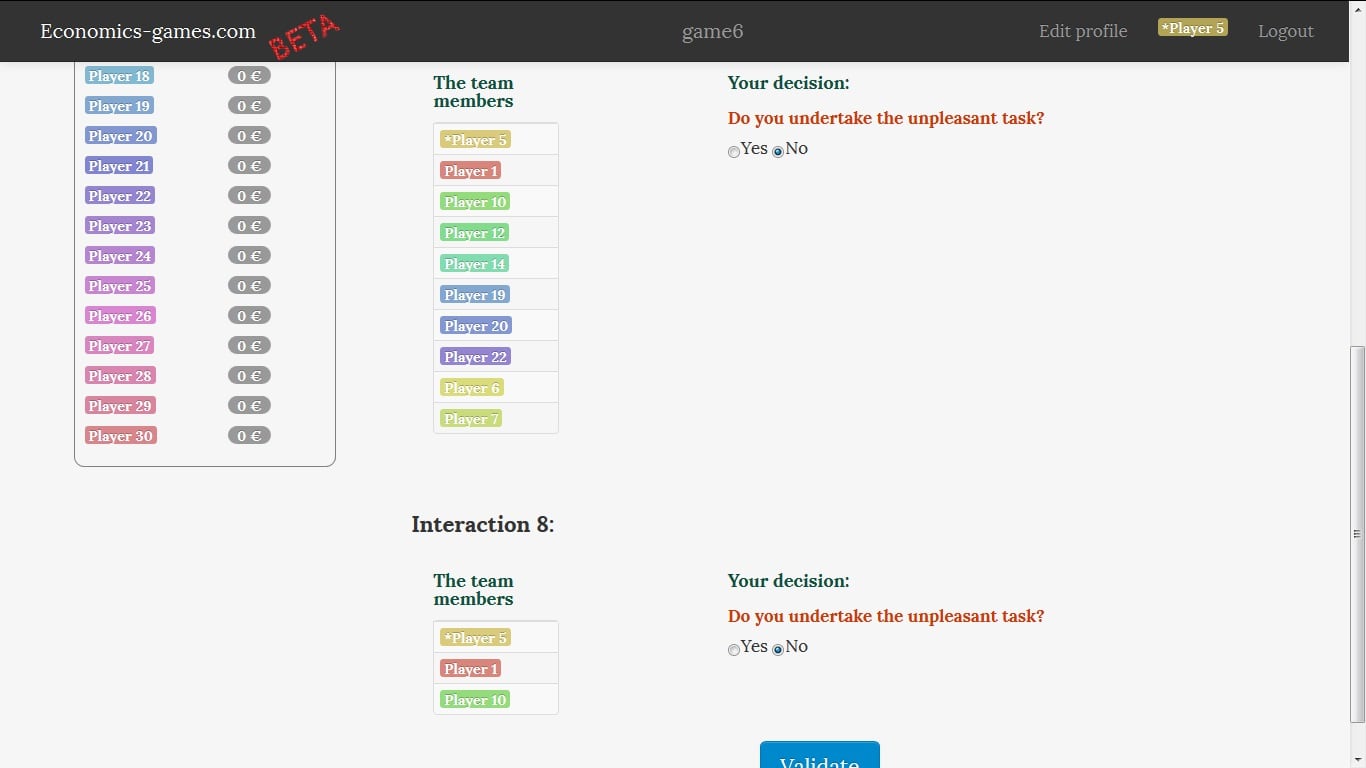

You will play this experiment repeatedly.

You are part of a team working on a project. For the project to succeed, someone must undertake a particularly unpleasant task, for which nobody was specifically named as responsible. You must all decide simultaneously whether or not you undertake this task, without communicating.

Corresponding gains are determined as follows: The project's success is "valued" by each of you as € 20000. The unpleasant task is valued as being equivalent to a cost of € 13000 by the person who undertakes it. So if no one undertakes the task, the project is a failure and each team member has a payoff equivalent to € 0. If at least one person undertakes the task, the project is a success: those who have undertaken the task have a payoff equivalent to € 7000, the others a payoff equivalent to € 20000.
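The payoff rule above can be written as a short function. This is only an illustrative sketch: the names `SUCCESS_VALUE`, `TASK_COST` and `payoffs` are hypothetical and are not taken from the game software; the amounts come from the rules as stated.

```python
SUCCESS_VALUE = 20_000  # value of a successful project to each team member (euros)
TASK_COST = 13_000      # cost to a player who undertakes the unpleasant task (euros)


def payoffs(choices):
    """Return one payoff per player.

    `choices` is a list of booleans, True meaning the player undertakes the task.
    """
    if not any(choices):
        # Nobody undertook the task: the project fails and everyone gets 0.
        return [0] * len(choices)
    # The project succeeds: volunteers bear the cost, the others do not.
    return [SUCCESS_VALUE - TASK_COST if c else SUCCESS_VALUE for c in choices]


print(payoffs([True, False, False]))
print(payoffs([False, False, False]))
```

With one volunteer among three players, the volunteer receives 7000 and the two others 20000; with no volunteer, everyone receives 0.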

In fact, you will play two of these games at the same time, with random players from your class. The same players will remain in the same universes until the end.

Your overall payoffs are compared to those of everyone else in the class. Your goal is to maximize your payoff, not just to be better than the players you are faced with.

Game details:

Each player will take part in 2 experiments simultaneously.

Choose the number of players and the number of universes for each experiment. If there are 32 players and you choose 3 universes for the first experiment and 10 universes for the second, the students will be randomly spread across 3 universes (two with 11 students and one with 10) for the first experiment and across 10 small universes for the second experiment.
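One way to picture this random allocation is the following sketch. It assumes the universes are kept as equal in size as possible, as in the 11/11/10 example; the function name `split_into_universes` and the `seed` parameter are hypothetical, and the actual game software may allocate players differently.

```python
import random


def split_into_universes(players, n_universes, seed=None):
    """Shuffle the players and deal them round-robin into n_universes groups."""
    rng = random.Random(seed)  # a seeded generator makes the draw reproducible
    shuffled = list(players)
    rng.shuffle(shuffled)
    # Every n_universes-th player goes to the same universe, so group sizes
    # differ by at most one.
    return [shuffled[i::n_universes] for i in range(n_universes)]


first = split_into_universes(range(32), 3, seed=1)
second = split_into_universes(range(32), 10, seed=2)
print(sorted(len(u) for u in first))
print(sorted(len(u) for u in second))
```

Using a different seed for each experiment means that the two allocations are drawn independently, so a player generally faces different opponents in each one.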

Having too few universes can create an incentive problem here. In this kind of game, players usually pay more attention to their ranking than to their "absolute" payoff, which distorts incentives (this is why experimental economics research insists on paying players real money). One way to alleviate this problem is to have several separate universes. A player with the best ranking in an extremely competitive universe might then end up with a bad overall ranking, so players have an incentive to pay attention to their "absolute" score as well. This is especially important for this game: if there are only one or two universes, many players will never even consider undertaking the unpleasant task, knowing that it would increase the other players' scores more than their own. The right number of universes is therefore a trade-off between the need to have enough universes and the interest of having universes of different sizes. For a class of 20 students, for example, I would choose 10 small universes of 2 players for the first experiment, and 4 universes of 5 players for the second. With 40 students, I would recommend 20 universes for the first experiment and 6 or 7 universes for the second.

This is an interesting game for illustrating mixed-strategy Nash equilibria.
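To see why, consider a symmetric mixed strategy in which each of the n players in a universe undertakes the task with probability p. A player who undertakes the task gets € 7000 for sure; a player who abstains gets € 20000 only if at least one of the other n - 1 players undertakes it, which happens with probability 1 - (1 - p)^(n-1). Indifference between the two choices requires (1 - p)^(n-1) = 13000 / 20000. The sketch below solves this for p and computes the resulting probability that the project succeeds. It is an illustration under the classroom payoffs, with a hypothetical function name, and it ignores the ranking and repetition effects discussed above.

```python
def symmetric_mixed_equilibrium(n, value=20_000, cost=13_000):
    """Return (p, success) for the one-shot game with n players.

    p is the probability that each player undertakes the task;
    success is the probability that at least one player does.
    """
    if n < 2:
        raise ValueError("the game needs at least two players")
    ratio = cost / value                # indifference: (1 - p)**(n - 1) == cost / value
    p = 1 - ratio ** (1 / (n - 1))
    success = 1 - (1 - p) ** n          # 1 minus the probability that nobody volunteers
    return p, success


for n in (2, 5, 11):
    p, success = symmetric_mixed_equilibrium(n)
    print(n, round(p, 3), round(success, 3))
```

With two players, each volunteers with probability 0.35 and the project succeeds with probability 0.5775. As the universe grows, both the individual probability and the success probability fall, which is one reason to compare universes of different sizes.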

A game theory assignment based on this game:

In a company, a team of n people is working on a project. For the project to succeed, a specific task (an inspection, for example) must be undertaken by at least one person. This task is particularly unpleasant and nobody has been clearly mandated to deal with it. We model the interests of the team members through the following payoffs. If nobody carries out the inspection, all members get a payoff of 0. If at least one member carries out the inspection, each member who does so gets a payoff of 2, and all the others get a payoff of 10.

(a) What are the Nash equilibria in pure strategies?

(b) What is the symmetric mixed-strategy Nash equilibrium?

(c) What is the success probability of the project for this equilibrium? How does this probability vary according to the size of the team? Comment.

(d) Assume now that, by virtue of his function, one of the team members (the "inspector") decides whether or not to check only after learning whether the others did. What are the subgame-perfect equilibria? What will happen if, at the beginning of the game, the inspector has the ability to credibly commit not to learn what the others have done before deciding whether to check? What if he can commit not to check? Why is it important that this commitment is credible?